A Critique Report on Gary Marcus’s “Deep Learning: A Critical Appraisal”

Machine learning is a statistical tool that fits curves to the distribution of data in order to ‘map’ inputs to expected outputs. Deep learning, taking its inspiration from the human brain, uses many hidden layers of neurons to model complex relationships in the data. In both cases, the mapping is approximate and subject to inductive bias. It is assumed that unseen data has a distribution similar to that of the training data; otherwise, the model may not perform well at all.
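The dependence on a similar distribution can be illustrated with a small sketch. Here a least-squares line stands in for "the model", and the quadratic `target` function and both input ranges are hypothetical choices made only for illustration:

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a * x + b."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    a = cov_xy / var_x
    b = mean_y - a * mean_x
    return a, b


def mean_abs_error(a, b, xs, ys):
    """Average absolute gap between the fitted line and the true values."""
    return sum(abs(a * x + b - y) for x, y in zip(xs, ys)) / len(xs)


def target(x):
    # Hypothetical "true" relationship the model has to approximate.
    return x * x


train_x = [i / 100 for i in range(101)]        # training inputs in [0, 1]
shifted_x = [2 + i / 100 for i in range(101)]  # unseen inputs in [2, 3]

a, b = fit_line(train_x, [target(x) for x in train_x])
in_dist_error = mean_abs_error(a, b, train_x, [target(x) for x in train_x])
shifted_error = mean_abs_error(a, b, shifted_x, [target(x) for x in shifted_x])

print(f"error on the training range: {in_dist_error:.3f}")
print(f"error on the shifted range:  {shifted_error:.3f}")
```

The line is a reasonable approximation on the range it was fitted to and a poor one outside it, which is the same failure mode, in miniature, that a larger model shows when test data drifts away from the training distribution.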

A large volume of data is of prime importance here. Less data means a poorer representation of the population, so the model does not learn the mapping well. More data means a better approximation and better learning of the properties in the data. Functionally, deep learning has evolved a great deal. CNNs exploit better ‘priors’ for images than fully connected networks (FCNNs) do, such as spatial locality and translation invariance; robustness to scale is weaker and usually relies on pooling or data augmentation. Transformer-based models (like BERT) have delivered better results on NLP tasks.
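The translation prior can be sketched in a few lines of plain Python. This is a toy 1D case under stated assumptions: the two-weight `kernel`, the input signals, and the use of a global maximum as a stand-in for pooling are all hypothetical choices for illustration.

```python
def correlate1d(signal, kernel):
    """Valid-mode sliding dot product (what DL libraries call convolution)."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


kernel = [1, -1]                      # hypothetical edge detector, shared across positions
pattern = [0, 0, 3, 0, 0, 0, 0, 0]
shifted = [0, 0, 0, 0, 0, 3, 0, 0]    # the same pattern moved 3 steps to the right

out_a = correlate1d(pattern, kernel)
out_b = correlate1d(shifted, kernel)

# The response moves with the input (equivariance) ...
print(out_a[:4] == out_b[3:])
# ... and a global max over positions no longer depends on where the pattern was.
print(max(out_a) == max(out_b))
```

Strictly speaking, the convolution itself is translation equivariant (the output shifts with the input), and invariance appears once a pooling step discards position. The same two weights are reused at every position, whereas a fully connected layer mapping these 8 inputs to 7 outputs would need 56 separate weights and would have to learn the pattern at each location independently.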

Deep learning is no longer confined to convolutions, as convolution is not a solution to every problem; attention models, for instance, find applications in NLP, speech, and image-related tasks. Geoffrey Hinton, as cited in [1], pointed out that convolutions may become inefficient if feature selection methodology does not evolve and if datasets are small. In all cases, connectivity of knowledge across domains is still missing. For instance, the ‘schmister’ example in [1], in which a person learns a new concept from a single definition, illustrates that models at the time did not understand knowledge representations across domains at a contextual level. We need to understand the data well before choosing any modeling approach.

“Transfer of learning” is achievable in DNNs with little retraining when the tasks are similar; for example, a network that has learned to recognize cats and dogs can be extended to categorize bananas and oranges. Earlier NLP systems could not represent words as context-preserving embeddings, but models like BERT have since exceeded the human baseline on benchmarks like SQuAD [2]. These models need training data on the order of billions of tokens (practically unlimited) to achieve this level of performance.

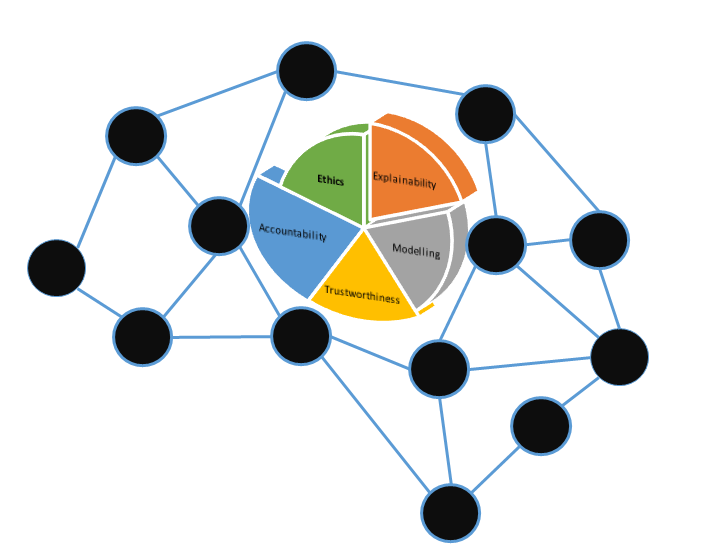

Open-ended inference is not guaranteed in deep learning. We can easily tell, without thinking, whether a baby is taller than his father. But a DNN trained on a harder task such as image recognition cannot do that unless explicitly trained to. Unlike humans, it has no way of incorporating disparate prior knowledge from other systems. Hence, it fails on simple common-sense questions even after being trained on a far harder problem. The essence of deep neural networks is their ability to find correlations between input and output variables based on the target. In terms of explainability, the representation of information within layers is either abstract or not yet entirely understood. We know that a network learns features based on the desired target, but why it learns certain features and not others is more or less internal to the model and not transparent.

Correlation does not imply causation, and ML (including DL) algorithms by themselves cannot distinguish causal dependencies from mere correlations. A neural net may find a positive correlation between the rate of ice-cream sales and the number of deaths, but the former need not be the cause of the latter. Non-causal features are therefore removed a priori during data cleaning so that the system is not misled into drawing incorrect inferences.
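The ice-cream example can be simulated to show how such a correlation arises. The sketch below assumes a hidden common cause, `temperature`, that drives both variables; the confounder, the coefficients, and the noise levels are hypothetical and are not taken from [1].

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length samples."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    std_x = sum((x - mean_x) ** 2 for x in xs) ** 0.5
    std_y = sum((y - mean_y) ** 2 for y in ys) ** 0.5
    return cov / (std_x * std_y)


def residuals(ys, xs):
    """What is left of ys after removing a least-squares linear fit on xs."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    return [(y - mean_y) - slope * (x - mean_x) for x, y in zip(xs, ys)]


rng = random.Random(0)  # fixed seed so the run is repeatable

# Assumed data-generating process: temperature drives both quantities,
# and neither quantity has any effect on the other.
temperature = [rng.uniform(10, 35) for _ in range(2000)]
ice_cream = [5.0 * t + rng.gauss(0, 10) for t in temperature]
deaths = [0.3 * t + rng.gauss(0, 1) for t in temperature]

raw_corr = pearson(ice_cream, deaths)
partial_corr = pearson(
    residuals(ice_cream, temperature), residuals(deaths, temperature)
)

print(f"raw correlation:                 {raw_corr:.2f}")
print(f"after controlling for the cause: {partial_corr:.2f}")
```

A model that only sees `ice_cream` and `deaths` would find a strong relationship, yet once the common cause is accounted for the relationship largely disappears. Nothing in the correlation alone tells the model which of these situations it is in.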

Handling uncertainty is difficult for DL systems in general and for deep RL systems in particular. Such systems can fail on novel test inputs or on scenarios with even minor perturbations, as demonstrated in [1] even for a system as advanced as DeepMind’s Atari-playing agent.

DL systems perform well on non-hierarchical inputs. Natural languages, by contrast, have a hierarchical (though not perfectly regular) and often ambiguous structure, so RNNs may not generalize well in language generation tasks. Besides, the world is stochastic and uncertain. DNNs need to be complemented with other methods to deal with such situations. Algorithms such as the Extended Kalman Filter (used in motion planning) handle uncertainty to some extent, if not fully.
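To make the idea of explicitly tracking uncertainty concrete, here is a minimal sketch of a scalar, linear Kalman filter. It is a simplified stand-in for the Extended Kalman Filter mentioned above, not the EKF itself, and the function name, noise variances, and simulated measurements are all hypothetical.

```python
import random


def kalman_1d(measurements, meas_var, process_var, init_mean=0.0, init_var=1000.0):
    """Track a roughly constant scalar; returns a (mean, variance) pair per step."""
    mean, var = init_mean, init_var
    estimates = []
    for z in measurements:
        # Predict: the state is assumed constant, so only the uncertainty grows.
        var += process_var
        # Update: blend prediction and measurement according to their variances.
        gain = var / (var + meas_var)
        mean += gain * (z - mean)
        var *= 1 - gain
        estimates.append((mean, var))
    return estimates


rng = random.Random(1)  # fixed seed so the run is repeatable
true_position = 5.0
# Noisy sensor readings with standard deviation 2.0 (variance 4.0).
measurements = [true_position + rng.gauss(0, 2.0) for _ in range(200)]

estimates = kalman_1d(measurements, meas_var=4.0, process_var=1e-4)
first_mean, first_var = estimates[0]
final_mean, final_var = estimates[-1]

print(f"after 1 reading:    mean={first_mean:.2f}, variance={first_var:.3f}")
print(f"after 200 readings: mean={final_mean:.2f}, variance={final_var:.3f}")
```

Unlike a plain DNN prediction, every estimate here carries a variance that shrinks as evidence accumulates, so a downstream component can tell how much to trust it. This is the kind of complementary method the paragraph above has in mind.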

Trust issues: Given the lack of explainability and the inability to handle uncertainty completely, the predictions are approximate and should not be fully trusted. A DNN can hold in its connections only as much information as the maximum entropy of the data permits. The human mind, in contrast, exploits prior knowledge as a premise. That is why DL output can deviate from expectations. An argument from Yann LeCun [3] reads, “If intelligence is a cake, the bulk of the cake is unsupervised learning, the icing on the cake is supervised learning, and the cherry on the cake is reinforcement learning (RL).”

DL should not be treated as the solution to every problem in the universe! It is one of many tools for information extraction problems, the majority of which require incorporating vast knowledge from other sources. DL deserves exploration and rethinking in many ways. Perfectly intelligent systems (such as those acting or thinking rationally), as well as those mimicking human-level thinking, require a great deal of data and information exchange. We should explore how the human mind represents knowledge, integrates prior knowledge with a variety of complex abstractions (at different levels), processes new information, and uses all of this to draw inferences involving so many computations in real time. Integrating other knowledge-based systems with DL should be a focus. Marcus [1] argues that AI should help us unravel the human mind. I disagree only to the extent that the original approach of drawing on mathematics, human psychology, and related fields to work towards comprehensible ML, though its payoff may be far off, is the better route and must proceed side by side to avoid any possibility of an AI winter.

References

- [1] Marcus, G. (2018). Deep learning: A critical appraisal. arXiv preprint arXiv:1801.00631.

- [2] Devlin, J. (2018). BERT: Pre-training of deep bidirectional transformers for language understanding (Bidirectional Encoder Representations from Transformers) [Seminar presentation]. Retrieved from Stanford on 22 August 2021.

- [3] Ulhaq, A. (2021). Deep learning, past present and future: An odyssey.

Note: This article is also published on Medium.